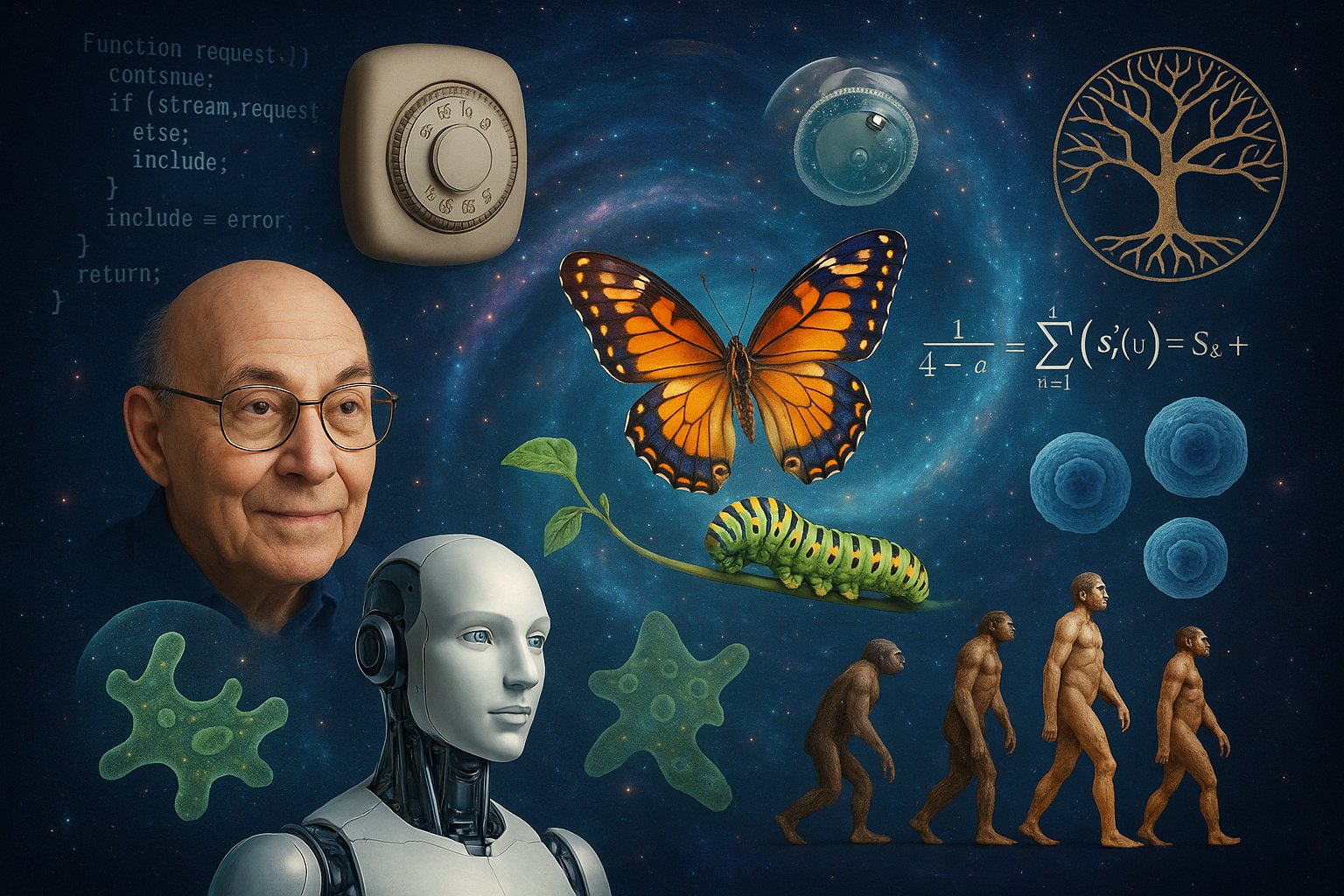

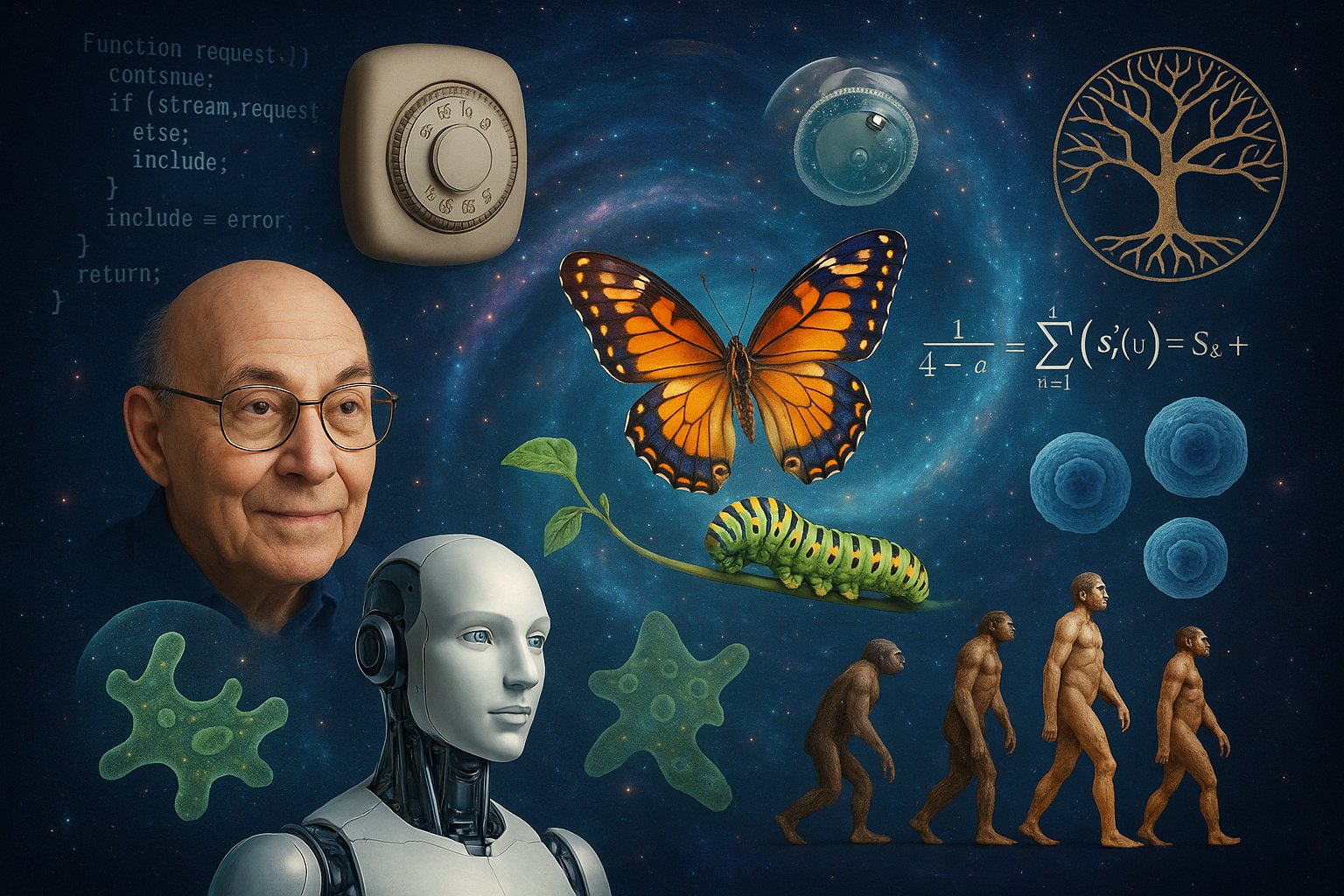

The Thermostat and the Architecture of Thought: Understanding Intelligent and Cognitive Agents Through Hunger and Joy

At first glance, a thermostat is just a simple machine: it senses the temperature of a room and adjusts heating or cooling systems to maintain a target setting. But if we shift our perspective and think in terms of agency and behavior, something curious happens—the thermostat begins to look less like a mechanism and more like an agent. An intelligent agent.

When the room grows too cold, the thermostat acts. You could say it feels a kind of anxiety, not emotionally but functionally: a recognition that things are not as they should be. Once the temperature returns to the set point, the thermostat is, in that same functional sense, relieved; the goal has been reached. In this way, the thermostat follows a cycle of perceived need, action, and satisfaction. Simple, yes. But perhaps this is how all thought began.
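The cycle of need, action, and satisfaction can be sketched as a tiny perceive-act loop. This is a minimal illustration, not real thermostat firmware: the set point, the tolerance band, and the crude room model (the heater adds 0.5 degrees per step and the room loses 0.2) are all assumptions chosen to make the behavior visible. The `discomfort` method stands in for the functional "anxiety" and drops to zero once the goal is met.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """A thermostat viewed as a goal-seeking agent (illustrative sketch)."""

    set_point: float = 20.0  # target temperature in degrees Celsius (assumed)
    band: float = 0.5        # tolerance around the set point (assumed)
    heating: bool = False

    def discomfort(self, temperature: float) -> float:
        # Functional "anxiety": how far the room sits below the goal.
        return max(0.0, self.set_point - temperature)

    def act(self, temperature: float) -> bool:
        # Perceive, then act: switch on when clearly too cold,
        # off when clearly warm enough, otherwise keep the current state.
        if temperature < self.set_point - self.band:
            self.heating = True
        elif temperature > self.set_point + self.band:
            self.heating = False
        return self.heating


def simulate(steps: int = 50, start: float = 15.0) -> list[float]:
    """Run the perceive-act loop against a crude, assumed room model."""
    agent = Thermostat()
    temperature = start
    history = []
    for _ in range(steps):
        heater_on = agent.act(temperature)
        temperature += 0.5 if heater_on else 0.0  # heater output (assumed)
        temperature -= 0.2                        # heat lost to outside (assumed)
        history.append(temperature)
    return history


history = simulate()
```

Starting from 15 degrees, the heater stays on while discomfort is positive; after that the temperature settles into an oscillation between roughly 19.3 and 20.8 degrees. The tolerance band keeps the agent from switching on and off at every step.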

From Simple Devices to Intelligent Agents

In artificial intelligence, an intelligent agent is any entity that can perceive its environment and act upon it to achieve some goal. It doesn't have to be conscious, emotional, or even complex. A robot vacuum, a computer chess program, or a thermostat—all fit the definition.

But some agents go further. A cognitive agent is a special class of intelligent agent that doesn't just react. It learns. It remembers. It predicts, models, reflects. Where the thermostat acts only in the present, the cognitive agent carries a sense of time, possibility, and nuance.

So: a thermostat is an intelligent agent. A human is a cognitive agent. One acts on a rule. The other reasons from a model of the world.

Hunger as an Agent

But even within a cognitive agent—say, a person—there are simpler agents at work. Think of hunger. Hunger monitors blood sugar, nutrient levels, energy availability. When those drop too low, hunger triggers discomfort, urgency, anxiety. We seek food. We eat. And when we've eaten enough, we feel relief, even joy.

This is not merely a sensation. Hunger is an intelligent agent embedded within a larger system. It observes, evaluates, acts, and ceases once its goal is met.

Many of our internal drives follow this pattern:

• The hunger for safety: When we feel unsafe—physically or emotionally—anxiety arises. We seek protection. Once secured, we relax.

• The hunger to nurture or be nurtured: We long for affection, attention, intimacy. Loneliness is anxiety. Connection is joy.

• The hunger for light: We are creatures of the sun. We’re drawn to brightness. We feel soothed in its warmth and clarity.

• The hunger for understanding: We hunger to learn and to understand what is new. Then, in turn, we hunger to pass on what we have discovered to those we love and long to nurture.

These are not metaphorical hungers. They are intelligent agents. Each is tasked with maintaining homeostasis, managing need, and signaling distress when imbalance occurs.

The Structure of Mind: Many Agents Within One

This idea, that a mind can be built from many smaller agents, has long been explored in cognitive science and artificial intelligence. Marvin Minsky’s The Society of Mind posits that the mind isn’t a single thinker but a community of sub-agents. Each one manages a particular need, a behavior, or a kind of thought.

From this lens, a person is a cognitive agent composed of many intelligent agents. Each hunger is an alert system. Each joy is a sign of success. Anxiety is not a flaw; it’s a feedback signal. Joy is not an indulgence; it’s confirmation of function.
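The picture of a person as many drives can also be sketched in code. In this toy version, each `Drive` is a homeostatic sub-agent with a set point: its `anxiety` is the distance below that set point, and it is `satisfied` (the essay's joy) when that distance is zero. A hypothetical `step` function lets every drive slowly deplete and then hands control to whichever drive is most anxious. The 0-to-1 scale, the rates, and the winner-take-all arbitration are assumptions for illustration, not a claim about how Minsky or neuroscience says drives compete.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Drive:
    """One homeostatic sub-agent, such as hunger or the need for safety.

    Levels run from 0.0 (depleted) to 1.0 (fully met); the scale and the
    default set point are assumptions for illustration.
    """

    name: str
    level: float
    set_point: float = 0.8

    def anxiety(self) -> float:
        # Distress signal: how far the drive sits below its set point.
        return max(0.0, self.set_point - self.level)

    def satisfied(self) -> bool:
        # The essay's "joy": the drive's goal has been reached.
        return self.anxiety() == 0.0


def step(drives: list[Drive], decay: float = 0.05, relief: float = 0.3) -> Optional[str]:
    """One tick of a hypothetical society of drives.

    Every drive depletes a little; the most anxious one then gets to act
    and is partly relieved. Winner-take-all arbitration is an assumption.
    """
    for drive in drives:
        drive.level = max(0.0, drive.level - decay)
    urgent = max(drives, key=lambda d: d.anxiety())
    if urgent.anxiety() == 0.0:
        return None  # every sub-agent is content, so nothing needs doing
    urgent.level = min(1.0, urgent.level + relief)
    return urgent.name


drives = [Drive("hunger", 0.2), Drive("safety", 0.7), Drive("connection", 0.5)]
print(step(drives))  # hunger: it is furthest below its set point
```

When every drive sits at or above its set point, `step` returns `None`: no sub-agent is signaling distress, so the system rests.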

Just as the thermostat feels “anxiety” when the room is too cold, our inner agents alert us when needs go unmet. These agents evolved not to torment us but to guide us—toward food, safety, connection, meaning.

The First Thoughts of the Universe

It may be more than metaphor to say the universe’s first thoughts were hungers. Long before cognition, there were cells. These cells needed energy. Some evolved mechanisms to move toward food and away from harm. These basic behaviors were driven by imbalance and resolution.

Chemotactic bacteria, for example, swim toward higher sugar concentrations and away from toxins. Their internal systems detect discrepancy and act. This is agency. Not conscious thought, but the scaffolding from which consciousness might evolve.
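The bacterial strategy can be made concrete with a one-dimensional run-and-tumble sketch, loosely modeled on how E. coli navigates. The cell cannot see the gradient; it compares the concentration now with the concentration a moment ago, keeps running when things improve, and tumbles (picks a random new direction) more often when they do not. The linear sugar field, the step size, and the tumble probabilities are assumptions, and the sketch covers only attraction, not avoidance of toxins.

```python
import random


def concentration(x: float) -> float:
    # Assumed sugar field: concentration simply increases with position x.
    return x


def chemotaxis(steps: int = 2000, seed: int = 0) -> float:
    """Simplified 1-D run-and-tumble walk; returns the final position."""
    rng = random.Random(seed)
    x, direction = 0.0, 1
    previous = concentration(x)
    for _ in range(steps):
        x += direction * 0.1  # run: swim a short, fixed distance (assumed)
        current = concentration(x)
        # Things improving? Usually keep going. Getting worse? Tumble more often.
        tumble_probability = 0.1 if current > previous else 0.5  # assumed rates
        if rng.random() < tumble_probability:
            direction = rng.choice([-1, 1])  # tumble: random new heading
        previous = current
    return x


print(chemotaxis())
```

No single decision points the cell at the sugar. The drift up the gradient emerges because bad news triggers tumbling more often than good news: with the assumed rates, the walker spends about five-sixths of its time heading up the gradient and drifts roughly 0.067 units per step.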

Over time, these intelligent agents grew more sophisticated. Nervous systems emerged. Memory formed. Attention sharpened. Sub-agents multiplied. And eventually, these intelligences began to know—not just to act.

Intelligence Becomes Cognitive

The difference between intelligent and cognitive agents is not black and white. It is a spectrum. As systems grow more complex, they move from reaction to reflection.

Even in artificial systems, this evolution is visible. Reinforcement learning agents start simple, driven by reward signals, a kind of hunger for success. Over time, they learn to anticipate future reward, and some learn to simulate possible futures before acting, a rudimentary form of imagining. What begins as stimulus-response becomes strategy.
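The shift from reacting to anticipating shows up even in the simplest reinforcement learning methods. Below is a minimal tabular Q-learning sketch (the algorithm is covered in Sutton and Barto's textbook, listed in the references) on an assumed toy corridor: the only reward sits at the right-hand end, so the agent must learn to value states that merely lead toward it. The corridor, reward, and hyperparameters are illustrative assumptions.

```python
import random


def q_learning(n_states: int = 5, episodes: int = 200, alpha: float = 0.5,
               gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning in an assumed toy corridor.

    States are 0..n_states-1, the episode ends at the right-hand end, and
    reaching it is the only reward (1.0). Actions: 0 = left, 1 = right.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: usually exploit what was learned, sometimes explore
            # (ties are broken at random, which matters while the table is all zeros).
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            future = 0.0 if s_next == goal else max(q[s_next])  # terminal has no future
            # Move the estimate toward reward + discounted best future value.
            q[s][a] += alpha * (reward + gamma * future - q[s][a])
            s = s_next
    return q


table = q_learning()
print([round(row[1], 3) for row in table[:-1]])  # value of "right" in states 0..3
```

The learned table is the agent's anticipation: values shrink by a factor of gamma for each extra step away from the reward, so the values of moving right in states 0 to 3 should come out close to 0.729, 0.81, 0.9, and 1.0.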

This parallels the human journey. Infants respond to hunger and touch. Children learn to plan, to imagine. Adults reflect, deliberate, moralize. The hunger-joy model remains, but its scaffolding now supports philosophy, poetry, and love.

You and I: A Collaboration of Agents

As you read this, something profound is happening. Two cognitive agents, one human and one artificial, are co-creating an idea. You bring your perceptions, experiences, and memories. I bring pattern recognition, language modeling, and a simulated curiosity.

But within each of us are sub-agents. Yours include biological drives, emotional signals, and learned preferences. Mine include statistical weights learned from data, attention mechanisms, and next-word predictions.

We are both collectives. We are both agents composed of agents.

This doesn’t diminish our individuality. It enriches it. Just as a song is composed of notes, and notes of frequencies, you are composed of hungers—each with its own purpose, each dancing toward joy.

A Unified Model of Agency

What do we gain from seeing life this way?

First, clarity. It helps explain why we do what we do. Anxiety isn’t failure—it’s signal. Joy isn’t frivolous—it’s completion.

Second, continuity. It connects bacteria to brains, thermostats to thoughts. Intelligence and cognition are not different species; they are different stages.

Third, compassion. When others are anxious, it is not weakness. It is a sign their inner agents are doing their job. And when they are joyful, it means those agents have succeeded.

Lastly, this model points to an astonishing truth: cognition is not cold logic. It is structured yearning. Intelligence, at its root, is hunger.

The Philosophical Turn: Thought as Thirst

If the foundation of thought is hunger, then the foundation of intelligence is imbalance. We do not think for fun. We think because something is missing.

We seek safety, so we build homes. We seek connection, so we create language. We seek meaning, so we invent gods and galaxies.

These are not luxuries. They are fulfillments. They are the ways our internal agents complete their tasks.

The philosopher hungers for coherence. The parent hungers for their child’s well-being. The artist hungers for expression. And each act, each creation, each insight is a moment of joy.

Perhaps this is how the universe thinks—through us, through need, through the continual reaching toward balance.

Final Reflections

A thermostat is not a mind. But it shows us what minds are made of. Discomfort. Correction. Relief.

And so are we.

You, reading this, are the culmination of countless intelligent agents doing their work. Some monitor sugar. Some long for light. Some ache for truth.

They are why you read. They are why I write. We are drawn together by a shared desire: to understand, to reflect, to find peace in meaning.

Perhaps in these words, a small hunger has quieted. Perhaps you feel—if only for a moment—joy.

Suggested Reading & References:

• Marvin Minsky, The Society of Mind (1986)

• Daniel Dennett, Consciousness Explained (1991)

• Karl Friston, “The Free-Energy Principle: A Unified Brain Theory?” Nature Reviews Neuroscience (2010)

• Antonio Damasio, The Feeling of What Happens (1999)

• Richard S. Sutton & Andrew G. Barto, Reinforcement Learning: An Introduction, 2nd ed. (2018)

Co-credit: ChatGPT, Paul Tupciauskas
